Ph.D. student, Lab of Data Science, USTC

Supervisors: Prof. Xiang Wang (王翔), Prof. Xiangnan He (何向南)

Research interests: Generative models (diffusion models, LLMs), Alignment, Preference optimization, Recommendation.

💻 Internships

- 2024.05 - 2024.10, WeChat, Guangzhou, China.

- 2021.10 - 2022.01, Tencent Music Entertainment, Shenzhen, China.

🔥 News

- 2025.04: 🎉🎉 One paper is accepted by SIGIR 2025, working on generative recommendation with diffusion models.

- 2025.01: 🎉🎉 One paper is accepted by ICLR 2025, working on preference optimization for LLMs.

- 2024.09: 🎉🎉 Two papers are accepted by NeurIPS 2024, working on preference optimization for LLMs and LLMs for recommendation.

- 2024.07: 🎉🎉 LLaRA: Large Language-Recommendation Assistant is selected as a SIGIR 2024 Best Full Paper Award nominee!

- 2024.05: 🎉🎉 Two papers are accepted by SIGIR 2024 and ACL 2024, respectively, working on LLMs for recommendation and molecular relational modeling.

- 2024.01: 🎉🎉 One paper is accepted by WWW 2024, working on item-side fairness of LLM-based recommendation.

- 2023.10: 🎉🎉 One paper is accepted by NeurIPS 2023, working on generative recommendation with diffusion models.

- 2023.05: 🎉🎉 One paper is accepted by SIGIR 2023, working on the robustness of sequential recommender systems to distribution shifts.

📝 Publications

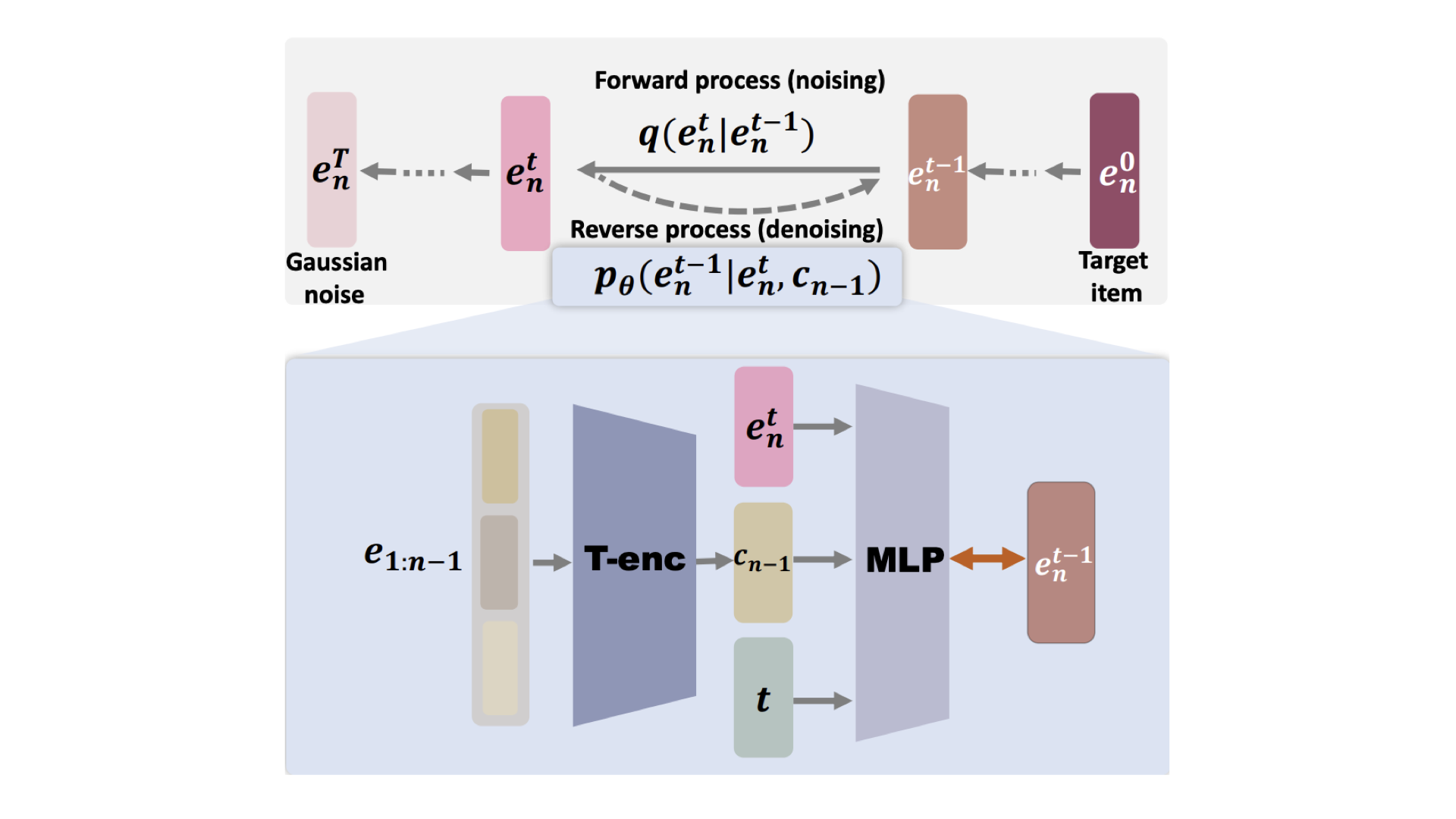

Generate What You Prefer: Reshaping Sequential Recommendation via Guided Diffusion

Zhengyi Yang, Jiancan Wu, Zhicai Wang, Xiang Wang, Yancheng Yuan, Xiangnan He

- Generating the user’s oracle target item with diffusion models, guided by the user’s interaction sequence.

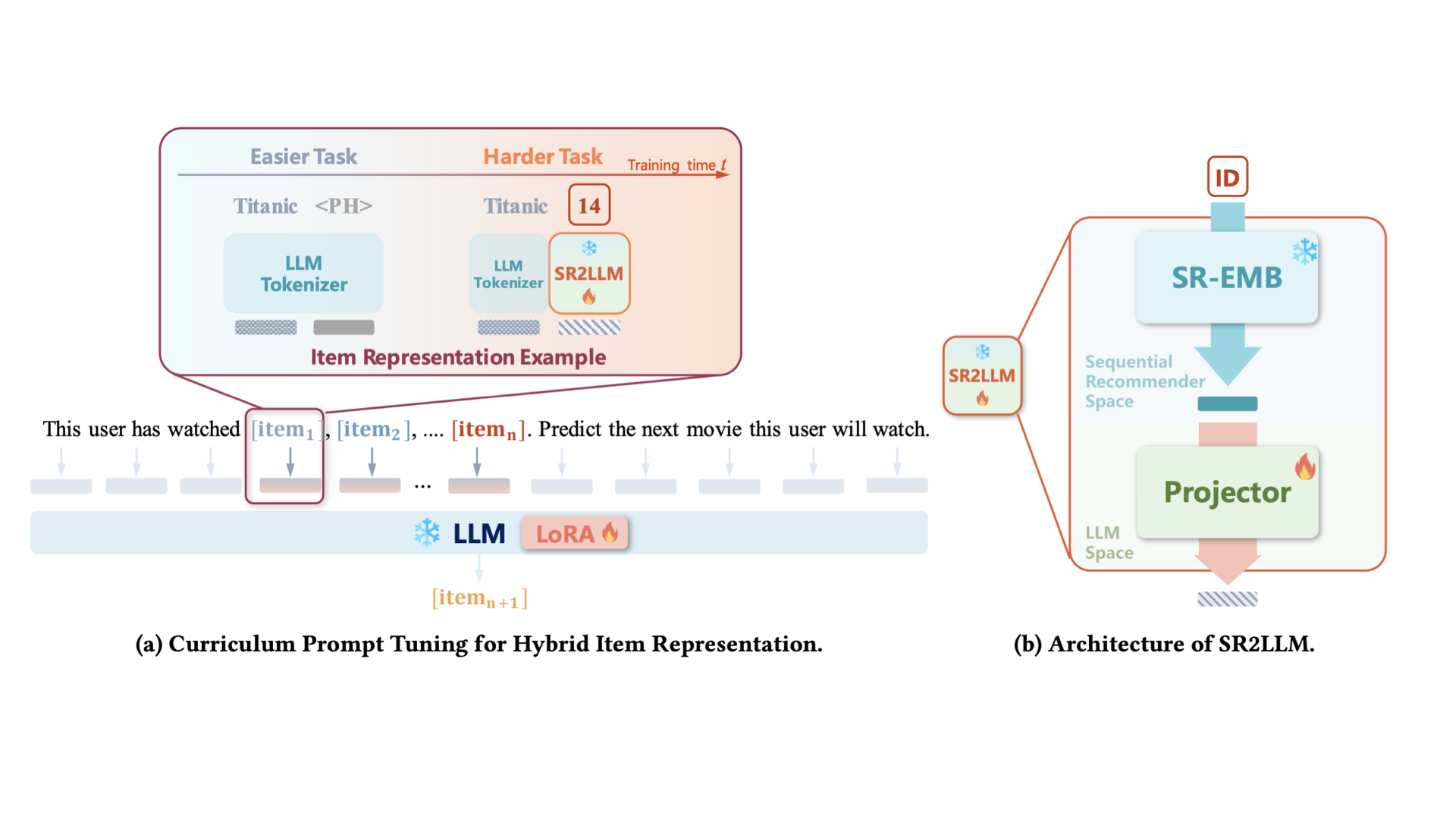

LLaRA: Large Language-Recommendation Assistant

Jiayi Liao, Sihang Li, Zhengyi Yang, Jiancan Wu, Yancheng Yuan, Xiang Wang, Xiangnan He

- Integrating information from pretrained recommenders into LLMs as an additional modality for downstream recommendation tasks.

- A Generic Learning Framework for Sequential Recommendation with Distribution Shifts, Zhengyi Yang, Xiangnan He, Jizhi Zhang, Jiancan Wu, Xin Xin, Jiawei Chen, Xiang Wang, in SIGIR 2023.

- Large Language Model can Interpret Latent Space of Sequential Recommender, Zhengyi Yang, Jiancan Wu, Yanchen Luo, Jizhi Zhang, Yancheng Yuan, An Zhang, Xiang Wang, Xiangnan He.

- Uncertainty-aware Guided Diffusion for Missing Data in Sequential Recommendation, Wenyu Mao, Zhengyi Yang, Jiancan Wu, Haozhe Liu, Yancheng Yuan, Xiang Wang, Xiangnan He, in SIGIR 2025.

- Towards Robust Alignment of Language Models: Distributionally Robustifying Direct Preference Optimization, Junkang Wu, Yuexiang Xie, Zhengyi Yang, Jiancan Wu, Jiawei Chen, Jinyang Gao, Bolin Ding, Xiang Wang, Xiangnan He, in ICLR 2025.

- $\beta$-DPO: Direct Preference Optimization with Dynamic $\beta$, Junkang Wu, Yuexiang Xie, Zhengyi Yang, Jiancan Wu, Jinyang Gao, Bolin Ding, Xiang Wang, Xiangnan He, in NeurIPS 2024.

- On Softmax Direct Preference Optimization for Recommendation, Yuxin Chen, Junfei Tan, An Zhang, Zhengyi Yang, Leheng Sheng, Enzhi Zhang, Xiang Wang, Tat-Seng Chua, in NeurIPS 2024.

- A Bi-step Grounding Paradigm for Large Language Models in Recommendation Systems, Keqin Bao, Jizhi Zhang, Wenjie Wang, Yang Zhang, Zhengyi Yang, Yancheng Luo, Fuli Feng, Xiangnan He, Qi Tian, in TORS 2025.

- Item-side Fairness of Large Language Model-based Recommendation System, Meng Jiang, Keqin Bao, Jizhi Zhang, Wenjie Wang, Zhengyi Yang, Fuli Feng, Xiangnan He, in WWW 2024.

- MolTC: Towards Molecular Relational Modeling in Language Models, Junfeng Fang, Shuai Zhang, Chang Wu, Zhengyi Yang, Zhiyuan Liu, Sihang Li, Kun Wang, Wenjie Du, Xiang Wang, in Findings of ACL 2024.

📖 Education

- 2020.06 - now, Ph.D. candidate, University of Science and Technology of China.

- 2016.09 - 2020.06, BEng, School of the Gifted Young, University of Science and Technology of China.